From Zero to Beam

Moving from in-house streaming code to a flexible and portable solution with Apache Beam

Long gone are the days when we used to consume data with Apache Spark Streaming, with an overly complicated, cloud-dependent infrastructure that was non-performant when load increased dramatically. Follow us on a journey of stack simplification, learning, and performance improvement within Empathy.co’s data pipeline.

A Blast from the Past

Our data journey began in 2017, building a full-fledged AWS-based pipeline, steadily consuming events from multiple sources, wrapping those into small batches of JSON events, and sending them on to the Spark Streaming consumer.

Here’s a simplified view of what that exact streaming side of things looked like - together with the important bits of infrastructure, such as queues where events would be written:

Main technologies found in the old stack:

- SQS: Simple Queue Service, Amazon’s managed message queuing service, mainly used to asynchronously send, store, and retrieve multiple messages of various sizes. Single events were stored in queue #1, awaiting their processing by the event-wrapper.

- SNS: Simple Notification Service, Amazon’s web service that makes it easy to set up, operate, and send notifications from the cloud. We used it to notify other infrastructure elements that a new batch of events wrapped by event-wrapper was ready to be pulled and consumed.

- Parquet: An open-source, column-oriented data file format designed for efficient data storage and retrieval. It provides efficient data compression and encoding schemes with enhanced performance to handle complex data in bulk. A data lake was built with all the Parquet files generated by this part of the infrastructure. We then continued to generate analytics from them via Apache Spark batch jobs.

In-house services:

- Event wrapper: In charge of taking individual events written into the queue by previous services, merging them into a bigger set in a single JSON file, and forwarding them into another queue.

- Spark consumer: Constantly polled the queue, 10 messages at a time, and parsed them into Parquet files for later processing by subsequent batch jobs.
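To make the batching idea concrete, here is a minimal plain-Java sketch of what the event wrapper did conceptually: grouping individual JSON events into larger JSON-array batches. The class name, method name, and batch size are hypothetical and chosen only for illustration. The real service talked to SQS, SNS, and S3, all of which are left out here.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of the event wrapper: groups single JSON events into JSON-array batches. */
public class EventWrapperSketch {

    /**
     * Splits the events into consecutive groups of at most batchSize
     * and renders each group as one JSON array string.
     * Each event is assumed to already be a valid JSON object string.
     */
    public static List<String> wrap(List<String> events, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<String> batches = new ArrayList<>();
        for (int start = 0; start < events.size(); start += batchSize) {
            int end = Math.min(start + batchSize, events.size());
            batches.add("[" + String.join(",", events.subList(start, end)) + "]");
        }
        return batches;
    }

    public static void main(String[] args) {
        List<String> events = List.of("{\"id\":1}", "{\"id\":2}", "{\"id\":3}");
        // With a batch size of 2, three events become two batches.
        wrap(events, 2).forEach(System.out::println);
    }
}
```

Every hop in a design like this (queue, wrapper, notification, consumer) is a separate component to operate and scale, which is part of what made traffic peaks painful.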

There’s more to it than what’s seen in the diagram, but that should give you an idea of how things looked back then - and yes, it was all built on AWS, with little room for portability short of making major changes to the whole set of services that made up this part of the pipeline.

Some cons found in this solution:

- Scalability: Major sales or special events such as Black Friday were a headache when traffic went through the roof, as it was not easy to increase the throughput in the consumer. This resulted in delayed events and visualizations not being updated in time.

- Portability: Having so many AWS bits underneath made the system hard to migrate into another cloud, and finding alternative equivalent services became a major pain point that needed to be solved urgently.

- In-house connector: Pulling from SQS in Spark Streaming relied on a connector we had built and had to maintain ourselves, kept apart from all the parsing logic that made the data fit the schema defined for raw data.

You may be wondering, “Why not use Beam from the beginning? It was released in 2016!” Well, given the experience the team had with Apache Spark, it made sense to go with the much-proven Spark Streaming rather than making the switch to a technology that was still in its early stages.

Winds of Change

Despite the cons, we managed to improve its performance over time, making it a bit more capable of handling higher loads of traffic without missing a beat.

However, the portability pain point was still there, even though it wasn’t a pressing issue as long as we didn’t use any other cloud… But given certain requirements, an extension of the components to support Google Cloud Platform was on its way to broaden the offering, and it was the perfect time to explore alternatives and simplify the system for the better! After a thorough analysis, we came to the conclusion that, given our expertise at the time, Apache Beam was the way to go.

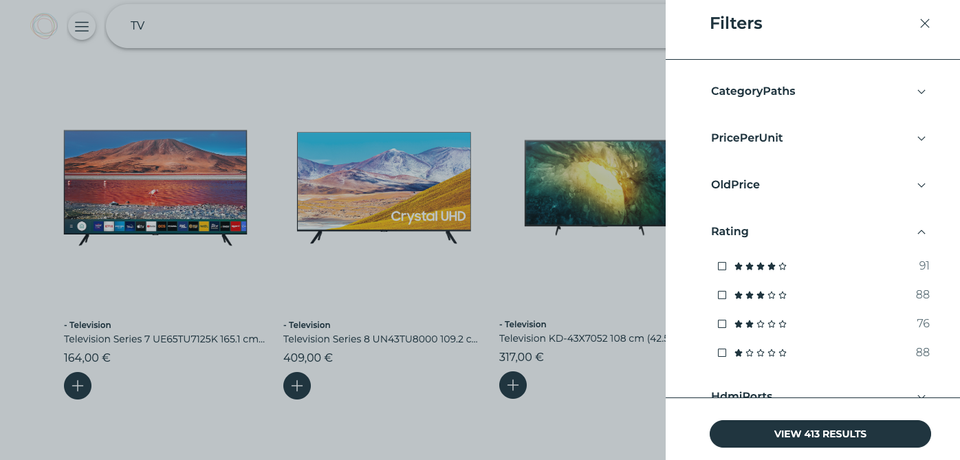

Something which used to be overly complicated and really dependent on infrastructure soon looked something like this:

What is Apache Beam?

According to the Apache Beam website itself:

“The easiest way to do batch and streaming data processing. Write once, run anywhere data processing for mission-critical production workloads.”

In short, it is a unified programming model for building data processing pipelines: a single codebase can be executed on Flink, Spark, and many other systems, known as runners. Check out the full list of supported runners.

Pick your language of choice and you’re pretty much ready to write code that will run transparently on the runner you choose. The official docs have everything you need to know!

Some pros that made our decision way easier:

- Multiple languages: Ability to write code in multiple languages such as Java, Python, and Go (or Scala, through Spotify’s Scio) and still get a portable pipeline out of it.

- Huge range of connectors: Out-of-the-box connectors for Kinesis, Pub/Sub, Kafka, and more are already provided, backed by an active community that develops and maintains them.

- Ease of development: Writing code in Apache Beam does have a certain learning curve, but once you get used to it, it’s really intuitive.

However, there are some cons worth mentioning also:

- Performance corner cases: Being able to target multiple runners means that the one-framework-for-all approach probably isn’t as performant as a native solution written purely for Flink, for example.

- Unable to update to the latest connector driver whenever you want: Drivers are embedded within Apache Beam and released along with it. Until a newer driver version has been properly tested and shipped in a Beam release, it can’t really be used, which can be hit or miss for things like vulnerability fixes.

As soon as we decided that Apache Beam would replace our Spark Streaming implementation, we started to dig into what other parts of the infrastructure needed to be changed in order to optimize it. First step: the connector to the queue.

The Pipeline as the Baseline

The base of every Apache Beam job is the Pipeline, which is defined as “a user-constructed graph of transformations that defines the desired data processing operations.”

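```java
// Create the pipeline from the parsed options.
Pipeline p = Pipeline.create(options);

// First step: read the input file line by line into a PCollection<String>.
p.apply("ReadLines", TextIO.read().from(options.getInputFile()));
```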

As soon as we’ve got data flowing through the pipe (that initial TextIO step), we can start modifying it however we wish.

TextIO is our connector of choice in this sample. It reads files from a given location, either on the local machine or remotely (S3 and GCS buckets, for instance), and gives us a collection of strings. In Beam, that collection is called a PCollection, which is a “data set or data stream. The data that a pipeline processes is part of a PCollection.”

As soon as you build that initial and crucial step in your pipeline, you are ready to start adding more stages that operate on the aforementioned PCollection.
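As a minimal sketch of what adding stages can look like, the following extends the snippet above with a filter, a map, and a write step. The file names `events.txt` and `out/events`, the step labels, and the transformations themselves are assumptions made up for illustration, not our production pipeline. It also assumes the Beam Java SDK and a runner (for example, the direct runner for local runs) are on the classpath.

```java
import org.apache.beam.sdk.Pipeline;
import org.apache.beam.sdk.io.TextIO;
import org.apache.beam.sdk.options.PipelineOptions;
import org.apache.beam.sdk.options.PipelineOptionsFactory;
import org.apache.beam.sdk.transforms.Filter;
import org.apache.beam.sdk.transforms.MapElements;
import org.apache.beam.sdk.values.PCollection;
import org.apache.beam.sdk.values.TypeDescriptors;

public class StagesSketch {
    public static void main(String[] args) {
        // Options (including the runner) come from the command line.
        PipelineOptions options =
            PipelineOptionsFactory.fromArgs(args).withValidation().create();
        Pipeline p = Pipeline.create(options);

        // Initial step: one String element per line of the (hypothetical) input file.
        PCollection<String> lines =
            p.apply("ReadLines", TextIO.read().from("events.txt"));

        lines
            // Each stage takes a PCollection and produces a new one.
            .apply("DropEmptyLines", Filter.by((String line) -> !line.trim().isEmpty()))
            .apply("Trim", MapElements.into(TypeDescriptors.strings())
                .via((String line) -> line.trim()))
            // Final step: write the results out under the (hypothetical) prefix.
            .apply("WriteLines", TextIO.write().to("out/events"));

        // Nothing executes until the pipeline is run.
        p.run().waitUntilFinish();
    }
}
```

The runner is picked at launch time through the `--runner` pipeline option (for example `--runner=FlinkRunner`, with the matching runner dependency available), so the pipeline code itself stays the same across environments.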

The apache/beam GitHub repository has plenty of examples to play with, so take a look! Also, the Programming Guide on the official website has everything you need to get up to speed on developing your very own pipelines. All the concepts mentioned in here (and more!) are covered in detail.

From SQS to Kinesis and Beyond

Initially, we were using a mix of SQS and SNS with S3 buckets to persist the events midway, sending notifications via SNS whenever a new batch of events was ready to be pulled by Spark. This was not ideal by any means and it complicated the overall portability and maintainability of the system, among other things.

We wanted a real-time stream of events from beginning to end, with the ability to replay messages when needed, and we wanted to remove all the unnecessary complexity of maintaining midway buckets and notifications. The solution, given the climate at the time, was clear: Kinesis.

For those of you who don’t know what Kinesis is, it’s basically AWS’s real-time streaming offering. The benefits, in addition to it being real-time, are that it is highly scalable and fully managed, which removes a lot of operational overhead - this was a decisive factor for us.
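The replay requirement is what separates a stream from a plain queue: records are retained for a period of time and each consumer keeps track of its own position, so reading does not remove anything. The following is a toy in-memory model of that idea in plain Java. It is not the Kinesis API, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy in-memory model of a replayable stream; not the Kinesis API. */
public class ReplayableStreamSketch {
    private final List<String> records = new ArrayList<>();

    /** Appends a record and returns its position in the stream. */
    public int append(String record) {
        records.add(record);
        return records.size() - 1;
    }

    /** Returns every retained record from the given position onwards, without removing anything. */
    public List<String> readFrom(int position) {
        if (position < 0 || position > records.size()) {
            throw new IndexOutOfBoundsException("position " + position);
        }
        return new ArrayList<>(records.subList(position, records.size()));
    }

    public static void main(String[] args) {
        ReplayableStreamSketch stream = new ReplayableStreamSketch();
        stream.append("event-a");
        int checkpoint = stream.append("event-b");
        stream.append("event-c");

        // A consumer that failed after its checkpoint can re-read from there.
        System.out.println(stream.readFrom(checkpoint)); // [event-b, event-c]
        // Or the whole stream can be replayed from the beginning.
        System.out.println(stream.readFrom(0)); // [event-a, event-b, event-c]
    }
}
```

With a queue plus intermediate buckets, the same replay would have meant locating and re-sending the stored batches by hand.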

From the start, the new jobs were developed with code intended to run on both GCP Dataflow and Flink on Kubernetes.

Reliable, Fast and Portable - What Else?

By applying the concepts from the documentation mentioned above, we built our pipelines - all in a streaming fashion - which save the processed records to both Parquet files and MongoDB collections with great throughput.

The result scales easily when high-traffic season comes (e.g. Black Friday or sales) and is portable across clouds. Hope you enjoyed this brief intro to Apache Beam and the transition from a really cloud-dependent environment to a more agnostic one!